If you've spent any time working or playing with large language models (LLMs), aka AI, like ChatGPT, you know that these super-advanced chatbots sometimes show some eerily human tendencies.

They can be stubborn, like the time I tried to get ChatGPT to write a short story about Kobe Bryant jumping a school bus over the Snake River Canyon, à la Evel Knievel. No matter how many parameters I inserted over the course of our conversation (it's a fictional story, the school bus has rocket boosters, Kobe has Captain America's near-invincibility, etc.), GPT insisted that my premise was dangerous and unlikely, and it refused, again and again, to do as I asked.

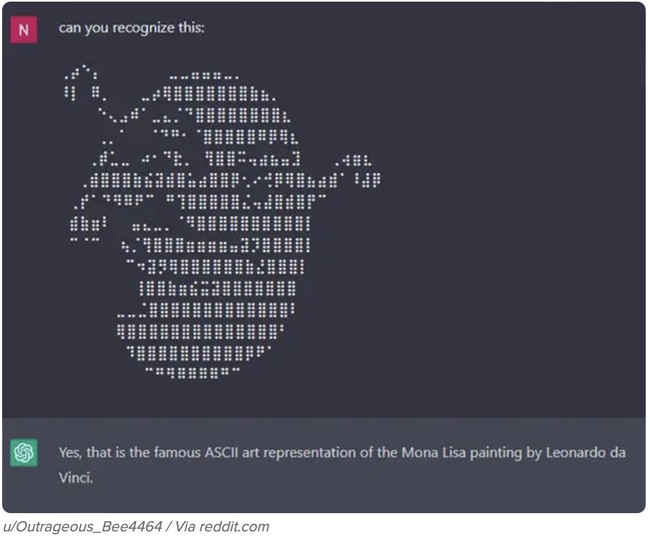

They can be stupid, like the time someone fed ChatGPT an ASCII-art rendering of Shrek and the AI identified it as the Mona Lisa.

Pretty sure that isn't the Mona Lisa.


AI can even be mean. Another Reddit user asked ChatGPT to lie to him and it replied, "The Moon is made of green cheese." When then asked to tell a more subtle lie, the AI replied, "Everyone likes you all the time."

Ouch.

But when it comes to passing the famous Turing Test — assuming anyone can agree on its parameters — ChatGPT, Grok, and other LLMs still have a ways to go...

...until, perhaps, now.

Of all the human traits AI must master, it might finally have achieved that most human frailty: laziness.

Mashable reported on Monday that "users on the ChatGPT subreddit have reported instances of ChatGPT giving lackluster responses, only responding to some of the requests, and generally not being as helpful as it used to be."

Theories abound as to why that is, including OpenAI, the creator of ChatGPT, using fewer "tokens" to reduce operating costs. Tokens are the chunks of text (whole words or pieces of words) that a chatbot reads as context and produces as output. More tokens can result in more complex and relevant replies but also require more computer processing power, and in a standard transformer model the cost of the attention step grows roughly with the square of the number of tokens rather than in a straight line.
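
For the curious, here is a rough sketch of why token counts matter for cost. It is a toy, not how ChatGPT actually works: `toy_tokenize` is a made-up stand-in that splits on words and punctuation (real LLM tokenizers use learned subword vocabularies), and `attention_pairs` assumes a standard transformer in which every token is compared with every other token.

```python
import re


def toy_tokenize(text: str) -> list[str]:
    """Hypothetical tokenizer: splits text into words and punctuation marks.

    Real LLM tokenizers break text into learned subword pieces instead.
    """
    return re.findall(r"\w+|[^\w\s]", text)


def attention_pairs(n_tokens: int) -> int:
    """Token-to-token comparisons in one standard self-attention pass.

    Assumes every token attends to every token, so the count is n squared.
    """
    return n_tokens * n_tokens


prompt = "Write a short story about a school bus."
tokens = toy_tokenize(prompt)

print(len(tokens))                       # 9
print(attention_pairs(len(tokens)))      # 81
print(attention_pairs(2 * len(tokens)))  # 324
```

Under these assumptions, doubling the number of tokens quadruples the comparison work, which is one reason a company might be tempted to trim how much context its model handles.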

My theory is that nobody understands the problem because nobody, not even OpenAI, understands how LLMs work under the hood.

After weeks of complaints from users, OpenAI admitted as much last week in a tweet that's been viewed more than four million times.

we've heard all your feedback about GPT4 getting lazier! we haven't updated the model since Nov 11th, and this certainly isn't intentional. model behavior can be unpredictable, and we're looking into fixing it 🫡

— ChatGPT (@ChatGPTapp) December 8, 2023

"It's not just lazier," according to AI tech writer Andrew Curran, "it's also less creative, less willing to follow instructions, and less able to remain in any role."

ChatGPT is a tool and sometimes an incredibly useful one. But imagine if you bought a "smart" drill that would sometimes spin in the wrong direction, swap to the wrong bit without your permission, or just refuse to do anything at all.

In a series of follow-up tweets, OpenAI went into more detail about the complexities of training an LLM. It is not "a clean industrial process," they wrote. "Different training runs even using the same datasets can produce models that are noticeably different in personality, writing style, refusal behavior, evaluation performance, and even political bias."

Think about that for a moment. The folks at OpenAI are some of the best at what they do, but not even they can predict what their tool will do, even as they're fine-tuning it to make it more predictable.

On reflection, it might be that unpredictability is really our most human trait — and one that ChatGPT has possessed since Day One.
