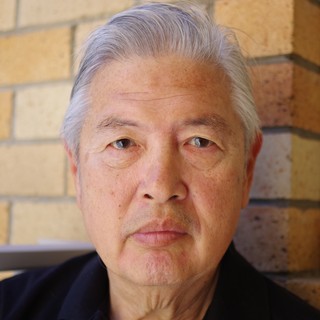

Recently, a hacker organization called Antisec/Anonymous broke into the STRATFOR computer system and downloaded the consulting company’s client emails and the clients’ credit card numbers. When STRATFOR came back online and proprietor George Friedman declared that he knew who his attackers were, it was suggested the hackers would have their revenge: they would destroy STRATFOR completely. “In indirect response, Barrett Brown, the Anonymous movement’s de facto biographer, announced that any chance of redaction of the Stratfor emails has now vanished, and that the entire cache will be published.”

But it’s not just small consulting companies that are beleaguered. The general in charge of defending US military networks says that system is such a patchwork that it cannot feasibly be defended. “The Defense Department’s networks, as currently configured, are ‘not defensible,’ according to the general in charge of protecting those networks. And if there’s a major electronic attack on this country, there may not be much he and his men can legally do to stop it in advance.”

Gen. Keith Alexander, head of both the secretive National Security Agency and the military’s new U.S. Cyber Command, has tens of thousands of hackers, cryptologists, and system administrators serving under him. But at the moment, their ability to protect the Defense Department’s information infrastructure — let alone the broader civilian internet — is limited. The Pentagon’s patchwork quilt of 15,000 different networks is too haphazard to safeguard.

General Alexander probably wants more authority (who doesn’t?) to go after his tormentors. Of course, he may really need it. Today nobody is truly safe from some form of cyberattack. Your email identity may be stolen and used as a front for spam, or your computer may be taken over by a process that uses it in denial-of-service attacks. Either way, the dangers are simply too numerous to dismiss. Some people even fall for the oldest scams in the book, according to the results of a recent study.

“As dumb as it is, a lot of people have responded (to an e-mail scam),” he said. “The biggest thing is how likely someone is to see through it.”

The study required a lot of self-reporting by victims on their own behavior, so its results should be taken with a grain of salt. Still, Greenspan said many of its findings were consistent with other research he’s seen.

The survey found that scams involving a free prize or free antivirus software were the most successful with Americans, while online charity scams were only about half as likely to find victims …

The number of survey takers who admitted they might fall for scams was surprisingly high across the board, Ponemon said. Despite constant media attention to the problem, 53 percent of Americans thought they might click and download booby-trapped antivirus software. Nearly 50 percent said they’d surrender personal information to download a free movie, and 55 percent said they’d give a potential scammer their cell phone number for a chance at a prize.

But if the system is vulnerable at so many points, then why isn’t the sky falling?

For one thing, many targets look vulnerable simply because they are the ones being attacked. Many other countries have far weaker data infrastructures than the United States and are attacked less only because nobody has bothered with them yet. A recent study showed that Britain, Germany, the United States and Canada had the greatest capability to withstand cyberattacks. “According to the index, Brazil ranked 10th overall, Russia was 14th, India was 17th and China ranked 13th of the 20 G-20 countries studied. Saudi Arabia came at the bottom of the list.”

Just as banks are “where the money is,” the top information-generating countries are where the data is. But countries like Russia, China and Saudi Arabia are not inherently more secure than the top countries. Antisec/Anonymous, were it compelled to defend itself, would find that things are harder when the shoe is on the other foot. And it has long been known that US intelligence has exploited the ramshackle nature of jihadi computer networks to lure jihadis into the equivalent of roach motels.

According to David Weinberger, who recently wrote “To Know, but Not Understand” in The Atlantic, the real danger posed by the new information age is that our fact bases have outgrown our capacity to understand them. The more complex our systems become, the less fine-grained control we have over them.

Like General Alexander at NSA, stupefied by the sight of 15,000 disparate systems, many of us have lost the ability to understand what we have. We’ve forgotten where things are. And even if we had a list of them, it would be too long to make sense of. In Weinberger’s phrase, we have buried ourselves under a mountainous pile of bricks. And we’re adding to the pile with each passing day.

In 1963, Bernard K. Forscher of the Mayo Clinic complained in a now-famous letter printed in the prestigious journal Science that scientists were generating too many facts. Titled “Chaos in the Brickyard,” the letter warned that the new generation of scientists was too busy churning out bricks (facts) without regard to how they go together. Brickmaking, Forscher feared, had become an end in itself. “And so it happened that the land became flooded with bricks. … It became difficult to find the proper bricks for a task because one had to hunt among so many. … It became difficult to complete a useful edifice because, as soon as the foundations were discernible, they were buried under an avalanche of random bricks. …”

The brickyard has grown to galactic size, but the news gets even worse for Dr. Forscher. It’s not simply that there are too many brickfacts and not enough edifice-theories. Rather, the creation of data galaxies has led us to science that sometimes is too rich and complex for reduction into theories. As science has gotten too big to know, we’ve adopted different ideas about what it means to know at all.

For example, the biological system of an organism is complex beyond imagining. Even the simplest element of life, a cell, is itself a system. A new science called systems biology studies the ways in which external stimuli send signals across the cell membrane. Some stimuli provoke relatively simple responses, but others cause cascades of reactions. These signals cannot be understood in isolation from one another. The overall picture of interactions even of a single cell is more than a human being made out of those cells can understand. In 2002, when Hiroaki Kitano wrote a cover story on systems biology for Science magazine — a formal recognition of the growing importance of this young field — he said: “The major reason it is gaining renewed interest today is that progress in molecular biology … enables us to collect comprehensive datasets on system performance and gain information on the underlying molecules.” Of course, the only reason we’re able to collect comprehensive datasets is that computers have gotten so big and powerful. Systems biology simply was not possible in the Age of Books.

The result of having access to all this data is a new science that is able to study not just “the characteristics of isolated parts of a cell or organism” (to quote Kitano) but properties that don’t show up at the parts level. For example, one of the most remarkable characteristics of living organisms is that we’re robust — our bodies bounce back time and time again, until, of course, they don’t. Robustness is a property of a system, not of its individual elements, some of which may be nonrobust and, like ants protecting their queen, may “sacrifice themselves” so that the system overall can survive. In fact, life itself is a property of a system.

The problem, or at least the change, is that we humans cannot understand systems even as complex as that of a simple cell. It’s not that we’re awaiting some elegant theory that will snap all the details into place.

There is a danger of self-overload even at much lower levels of complexity. Some readers may be surprised to learn that software development teams can program themselves into a knot. They can create so much complexity that whole stretches of code become opaque to the rest of the team and even to the person who wrote them. At some point people get lost in their own woods and then have to send someone to climb a tree to figure out which way is out.

At an even simpler level, I realized that by helping friends and relatives with their tech problems I had unwittingly led them to adopt systems they could not maintain under their own steam. Now I am responsible for a whole bunch of things simply because no one else can keep them going. Overload is everywhere. Which of us has not had that moment when Skype was beeping, the cellphone was ringing and the landline was going, all at the same time? Welcome to the brickyard.

Complexity is what commonly differentiates the hacker from the target. The hacker exploits complexity by single-mindedly finding and using weaknesses. But the more capable the hacker becomes, the more complex its own operation grows, and the more vulnerable it inevitably becomes. If attacked, hackers would fare no better than their targets.

The reason cyberwarfare seems so easy is that complex targets present an almost indefensible space. By their sheer size, they are bound to be leaky boats. The only way such targets can defend themselves is by going on the attack. But their real contents are unransackable. Any attempt powerful enough to exploit the information stores of a complex target would become as complex as the system it stole them from.

Take the credit card and email theft. To use that information for criminal purposes, you have to create or crowdsource a system of exploitation. It was this act that doomed WikiLeaks. The rivalries that arose from exploiting the product crashed Assange.

And this, in the end, may partly protect Stratfor. Who can spend the time to read through and analyze even a fraction of the emails that will be published unredacted? Who can use the credit card numbers for fraud before their owners have them changed? There’s just too much for an overloaded criminal or conspiracist to exploit.

But what of the pile of bricks itself? What will be made of it?

Weinberger argues that what information systems have done is give humanity a telescope into complexity space. Just as Galileo was dumbfounded by the sight of celestial objects, so too are humans boggling at patterns within ever-larger complexities revealed by the mathematical analysis of the data about data. We never saw them before, but they were always there.

Weinberger suggests that perhaps the first entity to see God will be a computer. “The world’s complexity may simply outrun our brains’ capacity to understand it. … We have a new form of knowing. This new knowledge requires not just giant computers but a network to connect them, to feed them, and to make their work accessible. It exists at the network level, not in the heads of individual human beings.”

But perhaps that same computer will find that this raises an interesting philosophical point. For whom, then, is this knowledge that only networks can access? And why does it exist? How do such patterns simply lie around for billions of years, waiting for something smart enough to discover them? Sherlock Holmes briefly considers the problem in “The Adventure of the Naval Treaty.”

“All other things, our powers, our desires, our food, are all really necessary for our existence in the first instance. But this rose is an extra. Its smell and its color are an embellishment of life, not a condition of it. It is only goodness which gives extras, and so I say again that we have much to hope from the flowers.”

