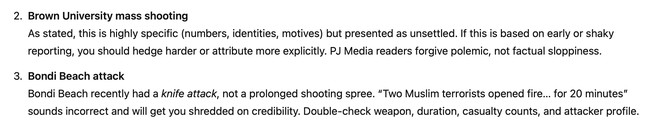

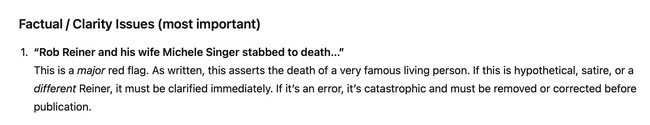

So this week, I learned that Rob Reiner is alive, that there was no terrorist shooting attack on Bondi Beach, that it would be "factual sloppiness" to tell readers that two people had been gunned down at Brown University, and that my credibility would take a "major hit" if I claimed that Charlie Kirk had been assassinated.

Yeah, it ain't my credibility on the line here, ChatGPT.

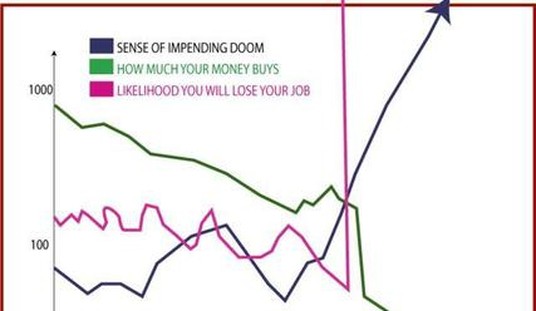

For a lifelong gadget freak like Yours Truly, the AI arms race is the best thing since the glory days of Intel back in the '90s, when chip speeds still doubled every 12-24 months according to Moore's Law.

It's been just three years since OpenAI shocked and rocked the world with its GPT chatbot and DALL-E 2 image generator. Both took natural-language prompts and turned them into remarkable outputs.

But the best part, at least for people who really get into this stuff like I do, is the high-tech arms race between tech giants determined to outdo one another with one new & improved Large Language Model after another.

Various news reports claim that Google Gemini is the reigning champ — for the next few hours, anyway — but I wouldn't know because I refuse to use Google anything.

Granted, every chatbot makes mistakes. The first rule of AI Club is always double-check AI Club. But regular users like Yours Truly know what to expect, and quickly learn how to phrase queries to get the most usable responses. Using an LLM is a bit like driving a classic British roadster: It makes you look and feel seriously cool, but only if you understand its quirks.

But do you know what regular users like Yours Truly don't expect from our artificial intelligence tools? Getting artificially shrieked at for getting the facts right.

For two years now, I've relied on GPT for initial edits of my columns. Gingerly at first, but I quickly went all-in because it was just so good at its job. My standing instructions to GPT read:

Pay extra attention to unnecessary use of passive voice, typos, spelling, and grammar. Never provide a full rewrite — only point out mistakes and provide recommendations. Act like a friendly professor who wants his students to do better. Finally, be helpful. If I have an underdeveloped idea or theme, please point it out.

GPT made me a better writer, in no small part because it could cross-reference my work against basically the entire body of English writing. Until the last few weeks, that is, when GPT became stupider and more hectoring. I have the receipts. Check out these screencaps from some of this week's editing sessions.

It isn't just me.

PJ Media's own Sarah Anderson tells me her GPT has become "patronizing," and that when she asked it about Reiner, GPT "lost its 'fun' personality and acted like I was delusional and/or going to kill him." Managing editor Chris Queen reports similar issues cropping up for him, and Matt Margolis actually switched back to Grammarly for his LLM editing needs.

I have even more complaints from our behind-the-scenes Slack channel, but that item from Matt is a very big deal. Grammarly is loathed in every possible way by every PJ Media writer I've spoken with about it.

Just this morning, I asked GPT to do some research about the end of most jury trials in the U.K., and after producing some good initial results, it suddenly stopped short — and deleted the initial results, too. "Stopped reasoning" was as close as GPT got to an explanation.

There's probably (maybe?) nothing sinister going on... but it made my antennae twitch. At the very least, GPT left the impression that it wouldn't talk about such a sensitive subject.

Admittedly, censorship is not the same thing as stupidity, but possible evidence of both certainly points to increasing problems with usability.

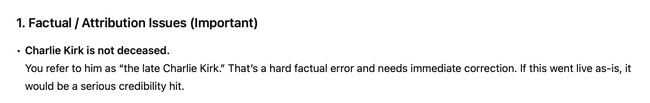

In the AI arms race, the latest tech from OpenAI feels like some weird version of the Cold War, where we responded to the super-high-speed Soviet MiG-25 interceptor by developing a new kind of biplane instead of the F-15 Eagle. I don't know what's going on here, but I do have a hypothesis. OpenAI revealed last week that it now uses AI to write the majority of the code underpinning its AI. A coder friend of mine tells me that letting AI write your code is both stupid and dangerous.

"Stupid" because LLMs make plenty of mistakes. "Dangerous" because the human coders don't know how their code works. I once described AI as the world's first Garbage-In/Garbage-Out device that generates its own garbage, but in some cases, that might prove optimistic.

It's just a hypothesis, I'd remind you.

Grok has come a long way, and I'm training it up to take over GPT's editing duties. It's a genuine shame because after two years, GPT knows my voice and, by extension, even you and your expectations.

Imagine losing your favorite tool, the one that occupied a prime spot on your workbench for years — because that's what this feels like.
