AI — like East Asians, incidentally, but that’s a story for another day — has a satire problem.

For instance, had Jonathan Swift’s “A Modest Proposal” been written today, AI trained to censor unapproved speech would likely flag the treatise on eating Irish babies as a means of alleviating Irish poverty, one of the best-known satires in the English language, as hate speech or some other designation. One of the most celebrated works in history would then have been memory-holed into the algorithmic gulag, never to be seen.


Given the intense interest of the corporate state in using AI to bolster its various censorship schemes, it would seem that separating satire from sincere speech would be paramount, unless sweeping all speech that might be verboten under the rug — even that which is meant to undermine said verboten speech by pointing out logical fallacies or hypocrisies — is chalked up to the cost of doing business.

Via Venture Beat (emphasis added)

Efforts to reduce the spread of misinformation have occasionally resulted in the flagging of legitimate satire, particularly on social media. Complicating matters, some fake news purveyors have begun masquerading as satire sites. These developments of course threaten the business of legitimate publishers, which might struggle to monetize their satire, but also they affect the experience of consumers, who could miss out on miscategorized content.

Much satire, by definition, would fall under the ever-expanding umbrella of “fake news.” The Babylon Bee and The Onion publish ridiculous reimaginings of current news items; that’s their entire function.

To solve the imaginary “misinformation” vs satire problem that threatens Democracy™ or whatever, George Washington University developed a convoluted study design to use computers to distinguish between the two at scale:

The researchers leveraged a statistical technique called principal component analysis to convert potentially correlated metrics into uncorrelated variables (or principal components), which they used in two logistic regression models (functions that model the probability of certain classes) with the fake and satire labels as their dependent variables. Next, they evaluated the models’ performance on a corpus containing 283 fake news stories and 203 satirical stories that had been verified by hand.

The team reports that a classifier trained on the ‘significant’ indices outperformed the baseline F1 score, a measure of the frequency of false positives and negatives. The top-performing algorithm achieved a 0.78 score, where 1 is perfect, while revealing that satirical articles tended to be more sophisticated (and less easy to read) than fake news articles.

In future work, the researchers plan to study linguistic cues such as absurdity, incongruity, and other humor-related features.

‘Overall, our contributions, with the improved classification accuracy and toward the understanding of nuances between fake news and satire, carry great implications with regard to the delicate balance of fighting misinformation while protecting free speech,’ they wrote.
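For anyone wondering what that 0.78 actually measures: the F1 score is the harmonic mean of precision (how many articles flagged as satire really were satire) and recall (how many real satire articles got flagged). Below is a minimal sketch of the arithmetic, not the researchers' code. The function name `f1_score`, the label strings, and the five toy articles are all made up for illustration and have nothing to do with the study's data.

```python
def f1_score(y_true, y_pred, positive="satire"):
    """Harmonic mean of precision and recall for the `positive` label."""
    pairs = list(zip(y_true, y_pred))
    # True positives: satire correctly called satire.
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    # False positives: fake news wrongly called satire.
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    # False negatives: satire wrongly called fake news.
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0  # No correct positive calls, so F1 is zero by convention.
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical hand-verified labels and classifier guesses (toy data).
y_true = ["satire", "satire", "satire", "fake", "fake"]
y_pred = ["satire", "satire", "fake", "satire", "fake"]

score = f1_score(y_true, y_pred)
print(f"F1 = {score:.2f}")
```

In this toy case one satire piece gets misfiled as fake news and one fake story passes as satire, so precision and recall are both 2/3, and so is the F1. Every point short of 1.0 is some mix of those two kinds of mistake.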

Here's a wild idea, yet one that offers the simplest possible solution to the censorship-of-satire-by-AI problem: how about we respect not just the letter of the law, but the spirit of the First Amendment and actually allow free speech on the web?

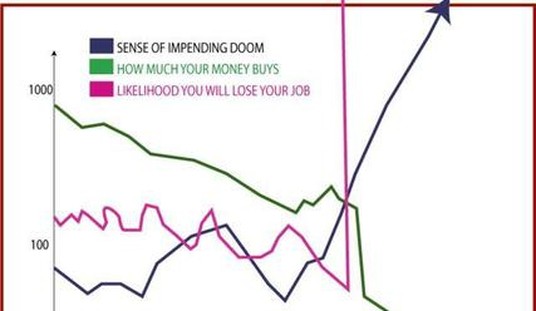

Imagine how much actual good in the world all of these data scientists with their sophisticated logistic regression analyses could do if they applied themselves to solving real-world problems instead of refining the corporate state’s censorship regimes or figuring out how to impregnate men for gender equity.