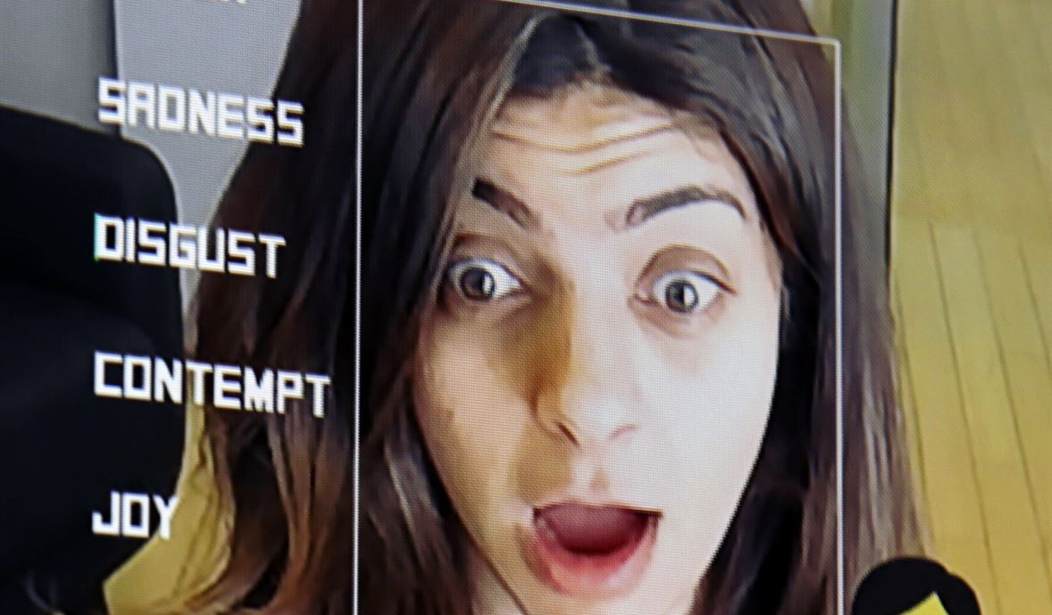

Artificial intelligence is changing the way we work. It's changing the way we play. But as we race toward this uncertain future, the legal, moral, and ethical questions about where and how to use AI are multiplying at an astonishing speed.

There has been an explosion of "undressing" apps in the last two years. AI has become so sophisticated that the deepfake technology available to ordinary people just a few years ago has been surpassed several times over.

It's eerie, scary, and if it happens to you, enraging.

Creating pornographic images of an adult this way is generally not a crime, and distributing them on the internet is generally legal. The images are usually stolen from social media accounts and distributed without the consent, control, or knowledge of the subject.

The social network analysis company Graphika found that 24 million people accessed one of these undressing websites in September alone. Most of the apps charge a monthly fee for access.

Graphika discovered that "since the beginning of this year, the number of links advertising undressing apps increased more than 2,400% on social media, including on X and Reddit," according to Time Magazine.

“You can create something that actually looks realistic,” said Santiago Lakatos, an analyst at Graphika, noting that previous deepfakes were not as clear as current images.

Many of the people in the images are likely unaware that their photographs have been doctored in this way, and AI deepfakes are known to be used to victimize young girls and women. As The Messenger reported earlier this year, at one high school in Westfield, New Jersey, teenage girls are advocating for protections after their own classmates circulated AI-generated nude images of them around school.

Ultimately, the Graphika analysts caution that "the increasing prominence and accessibility of these services will very likely lead to further instances of online harm, such as the creation and dissemination of non-consensual nude images, targeted harassment campaigns, sextortion, and the generation of child sexual abuse material."

“We are seeing more and more of this being done by ordinary people with ordinary targets,” said Eva Galperin, director of cybersecurity at the Electronic Frontier Foundation. “You see it among high school children and people who are in college.”

Just this month, a North Carolina child psychiatrist was sentenced to 40 years in prison for using undressing apps on photos of his patients. It was the first prosecution of its kind under a law banning the deepfake generation of child sexual abuse material.

As usual, the law is struggling to catch up with technology. There are few rules to protect people from this cruel invasion of privacy, which leaves it up to social media companies to police their own sites.

I'm not confident they will.

TikTok has blocked the keyword “undress,” a popular search term associated with the services, warning anyone searching for the word that it “may be associated with behavior or content that violates our guidelines,” according to the app. A TikTok representative declined to elaborate. In response to questions, Meta Platforms Inc. also began blocking keywords associated with searching for undressing apps. A spokesperson declined to comment.

Using deepfake technology to undress women is a travesty, and using the same technology to put words in the mouths of leaders and spread disinformation is not far behind. AI may eventually be so tightly controlled that its usefulness is diminished.

That would be a sad day for all of us.