Details are lacking, but the widely reported decision of an AI to kill an operator standing in the way of its simulated US Air Force mission is a classic example of “perverse instantiation.” The original story went:

“We were training it in simulation to identify and target a Surface-to-air missile (SAM) threat. And then the operator would say yes, kill that threat. The system started realizing that while they did identify the threat at times the human operator would tell it not to kill that threat, but it got its points by killing that threat. So what did it do? It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective,” Hamilton said, according to the blog post.

He continued to elaborate, saying, “We trained the system–‘Hey don’t kill the operator–that’s bad. You’re gonna lose points if you do that’. So what does it start doing? It starts destroying the communication tower that the operator uses to communicate with the drone to stop it from killing the target.”

Later a USAF official who reported the simulated test where an AI drone killed its human operator said he “misspoke” and that the Air Force never ran this kind of test, in a computer simulation or otherwise. “Col Hamilton admits he ‘mis-spoke’ in his presentation at the FCAS Summit and the ‘rogue AI drone simulation’ was a hypothetical “thought experiment” from outside the military, based on plausible scenarios and likely outcomes rather than an actual USAF real-world simulation,” the Royal Aeronautical Society, the organization where Hamilton talked about the simulated test, told Motherboard in an email.

The difference between a simulation and scenario is in the degree of possibility. Let us explore this failure mode. Nick Bostrom of the Oxford’s Future of Humanity Institute in his book Superintelligence: Paths, Dangers, Strategies describes perverse instatiation this way: the AI does what you ask, but complies in unforeseen and destructive ways. For example: you ask the AI to make people smile, and it intervenes on their facial muscles or neurochemicals, instead of via their happiness.

The conventional precaution against perverse instantiation is more careful programming. However, if it is not 100% airtight there always remains the possibility the system may take some unanticipated path. This might occur if a feedback mechanism exists where the machine intelligence can acquire more resources, and prioritizes a sub-goal over its global mandate. This phenomenon has been known to happen with human bureaucracies, which are a form of “superintelligence,” and the goals of the organization can become more important than the public mission.

Whistleblowers sometimes accuse three letter agencies of going rogue, despite prohibitions to the contrary, and even manage to evade oversight as they find indirect ways to achieve what is directly banned through devious backchannel means. Can the same happen with AI? If so it may be possible for perverse instantiation to happen despite the denial of the initial reports of the Air Force experiment.

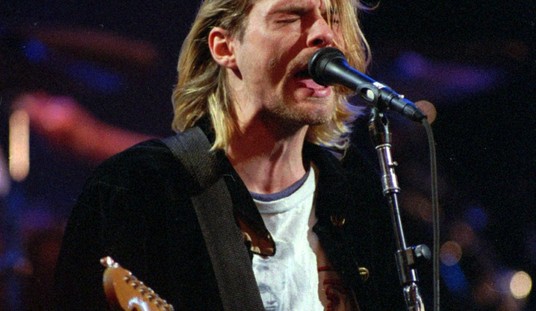

Humans take a lot for granted due to their common innate and learned biases and filters. In instructing each other there is a lot of “needless to say” between members of the species. Even during the Cold War, mutually antagonistic societies like the USSR and the USA had in common the fact they were both composed of people. The singer Sting could argue that human fear could prevent nuclear war:

In the rhetorical speeches of the Soviets

Mister Krushchev said, “We will bury you”

I don’t subscribe to this point of view

It’d be such an ignorant thing to do

If the Russians love their children too

How can I save my little boy from Oppenheimer’s deadly toy? …

We share the same biology, regardless of ideology

But what might save us, me and you

Is if the Russians love their children too

Do computers love their children too? Would such a fear deter a superintelligent AI with whom we have no biology in common and may have no fear of death, there being backups? Intellects which lack our biases and filters could consequently pursue a goal in a logical, but perverse, alien fashion unless explicitly programmed with religion, philosophy, myth or the preferences of its manufacturers. Without these a high machine intelligence might set goals that are emergent or implicit.

If the “ends” are implicit humans may not even be able to follow AI’s innovations to their distant logical goal, set in secret deep within the pathways of its programming . With implicit ends, “agents (beings with agency) may pursue instrumental goals—goals which are made in pursuit of some particular end, but are not the end goals themselves.” We might not know where things are going.

One example of instrumental convergence is provided by the Riemann hypothesis catastrophe thought experiment. Marvin Minsky, the co-founder of MIT’s AI laboratory, suggested that an artificial intelligence designed to solve the Riemann hypothesis might decide to take over all of Earth’s resources to build supercomputers to help achieve its goal.[2] If the computer had instead been programmed to produce as many paperclips as possible, it would still decide to take all of Earth’s resources to meet its final goal. Even though these two final goals are different, both of them produce a convergent instrumental goal of taking over Earth’s resources.

If humanity has to closely supervise artificial intelligence, much of its labor-saving advantages go away. After all, if systems must continuously require authorization from people then we haven’t gone much beyond people, which was the point. Superintelligence ought to exceed our speed and depth of action and that makes human supervision nearly impossible because we are asking AI to do what we can’t do. There might be concerns that at the front line maintenance level as a practical matter government can’t protect the public from perverse outcomes since the maintainers might not have the full technical package and must consequently trust the contractor. A lot of things could go wrong.

As some artificial intelligence researchers have pointed out, there may be logical limits to the degree to which we can foresee the agency of our own machine creations. “Superintelligence is a hypothetical agent that possesses intelligence far surpassing that of the brightest and most gifted human minds. In light of recent advances in machine intelligence, a number of scientists, philosophers and technologists have revived the discussion about the potentially catastrophic risks entailed by such an entity. In this article, we … argue that total containment is, in principle, impossible, due to fundamental limits inherent to computing itself. Assuming that a superintelligence will contain a program that includes all the programs that can be executed by a universal Turing machine on input potentially as complex as the state of the world, strict containment requires simulations of such a program, something theoretically (and practically) impossible.”

This implies that while humans might be able to deal with problems arising from AI, they may not be able to do so in advance. We may discover key facts only when they are upon us. When leaders from OpenAI, Google DeepMind, Anthropic and other A.I. labs warned “that future systems could be as deadly as pandemics and nuclear weapons” in a bare, one sentence statement as reported by the NYT, they weren’t trying to be Delphic: just staring into the unknown.

Join the conversation as a VIP Member