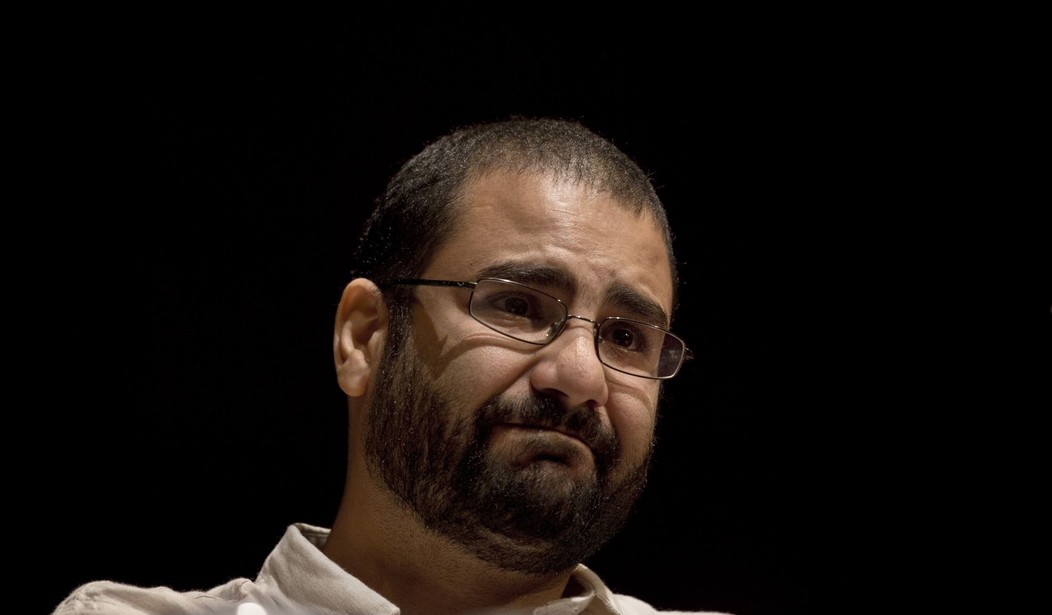

Why did the British government spend so much time and effort springing a radical Egyptian Islamist from jail, giving him British citizenship, and conducting him with great ceremony “back” to Britain, only to discover that its hero had called for the killing of the white race and the assassination of police and Jews, and had described Britons as “dogs and monkeys”? And why did it then retreat in embarrassment? To answer that, we have to dive into the way ideologies think. Otherwise, as Khalid Hassan put it, it is exceedingly hard to imagine how such a catastrophe could have happened:

> Britain finds itself in an utterly unprecedented and deeply humiliating situation, one that has made it a global laughing stock. Only in Britain could you witness the years-long campaign to pressure a country into releasing an extremist, only for that same nation, its politicians and media, to immediately fixate on sending him back. This farce unfolds while our Prime Minister, Foreign Secretary, and Minister for the Middle East have gone into hiding, having just welcomed this extremist, who has never lived here, "back" to Britain. The entire episode is so profoundly bizarre and without precedent that I struggle to convey the sheer astonishment with which my contacts across the Arab world are observing it.

But at least one plausible explanation for fiascos like this has long been familiar to software developers: slop. As many know, large language models can "hallucinate," confidently asserting things that aren't true simply because those things fit the patterns the model has learned. Slop is content made with such tools that you trust enough to cut and paste, and that turns out to be spectacularly wrong.

LLMs don’t process language the way humans do. They break input into tokens: chunks of text that may represent a word, part of a word, or punctuation. Each response is generated one token at a time, with the model predicting which token is statistically likely to come next, given everything in the text so far. This prediction is guided not by meaning or intent but by patterns learned from training data. The appearance of coherence comes from many such token-level guesses stitched together, not from any actual comprehension of the sentence being formed.

Can ideologies produce slop? There is no reason why they can't. First of all, institutions (of which ideologies are a subset) are a type of "machine intelligence." Like the silicon variety, they are not natural organisms but man-made. Like computers, bureaucratic collective intelligence (BCI) is driven by algorithms, which bureaucracies encode and learn in the form of rules. Institutions have embedded biases and forbidden statements, just as large language models do. They can imagine things, just as AI can. Comparing ideological thinking to the "hallucinations" of LLMs is a surprisingly useful analogy: just as artificial intelligence systems can generate plausible but ungrounded information from internal patterns rather than objective reality, so can ideological collectives.

Developers know that LLMs can generate coherent, confident, and syntactically correct text that is logically or contextually inaccurate. Similarly, ideologies often construct plausible, fluent narratives to explain complex events, narratives that have nothing whatsoever to do with the actual facts. LLMs prioritize predicting the next likely token from statistical associations in their training data; they are like a driver steering his car by the rear-view mirror. Ideologies often operate the same way, interpreting new information only through the lens of an existing, rigid framework rather than adjusting the framework to fit new facts. The Egyptian Islamist was a noble, freedom-fighting person of color because he had to be.

Imagine you’re a British political operative working under a tight deadline, with your reliable Talking Points application by your side, auto-completing statements, suggesting racist conspiracies, and polishing turns of phrase. Encomiums of Alaa Abd el-Fattah turn into press articles almost effortlessly. It feels like magic, until the floor collapses under you. Keir Starmer now says he never knew that the man he was so "delighted" to welcome to the UK wished the Jews dead and regarded Britons as "dogs and monkeys." How could he have been so badly deceived? The answer is that he swallowed the ideological slop.

The process of camouflaging Alaa Abd el-Fattah began in 2014, when the European United Left/Nordic Green Left (GUE/NGL) group in the European Parliament nominated him for the Sakharov Prize for Freedom of Thought. Fortunately, the Sakharov Committee did its due diligence on Alaa, and on October 1, 2014, the GUE/NGL group withdrew the nomination after his candid statements came to light. Group President Gabi Zimmer stated that they had been unaware of the tweets when proposing him. This episode did not alert Amnesty International, PEN America, or the Electronic Frontier Foundation, all of which have lauded Alaa but none of which has commented on the controversial social media posts. One can only conclude that they too were unaware of his views and may not be aware of them even now. What transpired was a process commonly known as reputation laundering: improving or "polishing" the reputation of a controversial, unethical, or outright villainous figure by linking them to noble, moral, humanitarian, or "holy" causes. It is under these clean sheets that Starmer was apparently taken in.

Alaa was a perfectly plausible package, until he wasn’t. Once Keir Starmer looked closely, his hero turned out to be a complete hallucination. Nor is Alaa Abd el-Fattah an isolated case. The use of ideological agents and templates is so widespread that it constitutes a supply-chain threat to public discourse. When ideologies hallucinate, or install unverified packages of "facts," they can make monumental errors that deceive even so-called experts. As the saying often attributed to Mark Twain goes, “It ain’t what you don’t know that gets you into trouble. It’s what you know for sure that just ain’t so.”