Ever since Hal, the maniacal computer in the film 2001: A Space Odyssey, decided he wanted to wear the pants in the Universe, the fear that super-intelligent computers would start bossing man around has been with us. The latest iteration is Elon Musk and a group of big-foot geniuses from Future of Life Institute calling for a pause in Artificial Intelligence research for six months. They think we need to face up to its dangerous implications.

But Bill Gates, another purported genius who also manages to be wrong about almost everything, says full steam ahead on research. AI is the greatest thing since — well, Hal. Alas, Gates, if he had been an officer in the old French Army, would have had the lowest grade for promotion. They recognized the danger of men with energy, drive, and in this case, money, who had a knack for making bad things even worse.

The fact is, it may be too late to rein in AI. Consider our tragic failure to impose strict ethical considerations on the burgeoning biotech industry. Eventually, mad scientists playing in their labs caused millions to die in the 2020-23 pandemic. So much for the pure libertarian refusal to set any reasonable limits by the government.

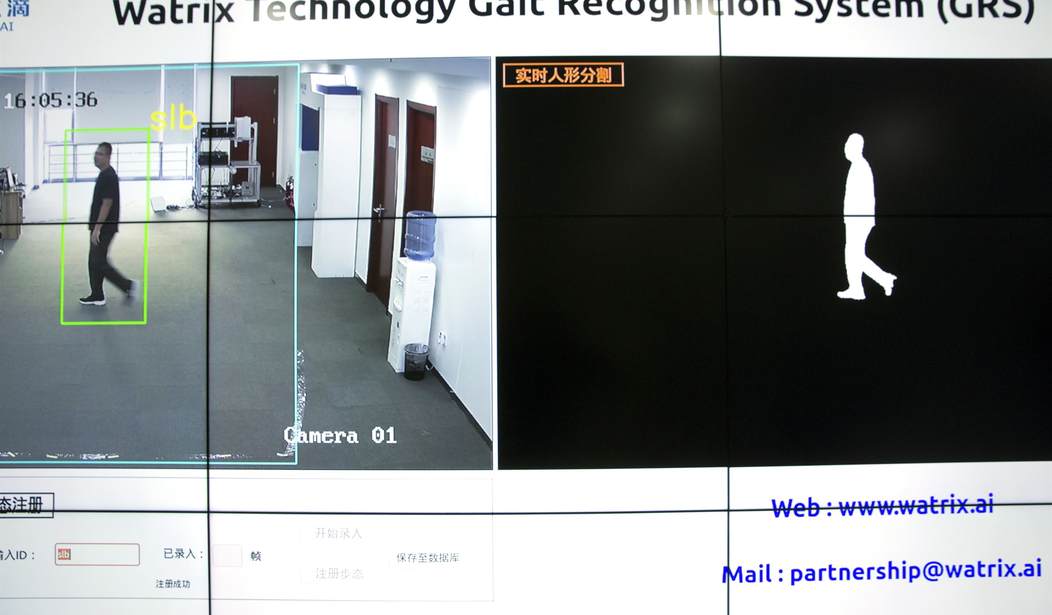

AI, unfettered by ethical considerations and legal restraints, will damage humanity in many unforeseen ways. As with nuclear power, it can be used for good or, as is human nature ever since Cain killed Abel, it can be used to kill, rob, and steal.

In their own crude way, scammers are tapping into AI. This is from a friend:

I am not a conspiracy theorist — in fact, I often argue that people need to apply just as much skepticism to alternative theories as they do to mainstream ones — but I’ve recently had some experiences that, frankly, I find a little disconcerting … today I got a “connection” request from a “woman” on LinkedIn who claimed she was a self-employed “executive director” (of what I never really found out — she also claimed to have started working “for the company” in 2014, which makes no sense).

Of course, I got the expected “hope we can be friends” message, so, out of curiosity, I began asking questions. The following exchanges were funny, but I think you’ll see what I found troubling too:

HER: You work in the field of explosive energy

ME: No. I work for an energy DRINK company.

ME: … So, tell me about going to the University of Edinburgh. I have always wanted to visit Scotland. It looks gorgeous!

HER: I think the University of Edinburgh is one of the oldest and most prestigious universities in Scotland, founded in 1583. It is located in the heart of Scotland’s capital city, Edinburgh, which is known for its fascinating architecture, rich history and vibrant cultural scene. Renowned for its excellence in research and teaching, the University of Edinburgh … [the description goes on and on].

HER: Which city in Scotland would you like to visit?

ME: After your detailed description, none. I know everything I need to know! LOL.

HER: Since my LinkedIn login is on my computer, we can set up a private connection if you don’t mind

ME: No, that’s not necessary.

… I think this, and other such exchanges I’ve had of late, are AI tests, and if this is true, AI is a lot further along than ChatGPT. Mind you, this exchange was far less subtle than others I’ve seen, but it really made me think of the potential dangers of completely made-up people and scenarios. It makes the ability to data mine a piece of cake, and that data can then be used to make the exchange even more credible. Be aware: We are on the precipice of a world in which fact and fiction will be exceedingly difficult to distinguish between.

Yes, consider poor Professor Jonathan Turley. Following his reasoned legal commentary on the Trump arrest, which probably annoyed the mob, a fake AI story flooded the internet. As he put it, “…I learned that ChatGPT falsely reported on a claim of sexual harassment that was never made against me on a trip that never occurred while I was on a faculty where I never taught. ChatGPT relied on a cited Post article that was never written and quotes a statement that was never made by the newspaper.”


There is a wide field of deception awaiting us.

And what of our poor Nigerian prince who needs you to wire the money to retrieve the lottery ticket so he can hire the lawyer to regain his rightful share of the estate so he can be named king and make you a rich prince? That story is a feature, not a bug. The Nigerian scam is designed to be stupid on the theory that if you believe the unbelievable, you are more likely to do the unbelievable and send the money.

But what if the situation is now reversed? What if with AI to assist the scammers, you have to be a genius to know it is fake? And how many of us can be geniuses 24 hours a day? The time has come to start setting some judicious legal guardrails.
