The controversy about apparent liberal bias in social media seems to have come to a head with the recent collaborative deplatforming of Alex Jones. Add to that Shopify renouncing its free speech pledge and removing storefronts from four gun and ammunition dealers, and New York governor Andrew Cuomo pressuring banks not to do business with companies of which Cuomo doesn’t approve: “Nice bank you have there. It would be a shame if the New York Attorney General decided to investigate.”

We at PJ have certainly had our own bad experience with this — Bridget Johnson was blocked from Twitter for weeks, Jim Treacher has been blocked twice in the course of a few days — and of course there are a dozen other examples.

When pressed about it, the answer is always “algorithms.” “It’s not our fault, it’s the algorithms.” So what are they talking about?

An algorithm is nothing more than a procedure — a series of steps that lead to a result. The word is mostly used with reference to computer programs, but not necessarily — the way you learned to do long division is an algorithm.

The specific algorithms that are used come from the category of “machine learning” or more broadly “artificial intelligence.” These phrases sound science-fictional and cool but the reality is that all of these are doing something conceptually simple: the programs get inputs, process them in various ways, and present them to a person who says “you’re getting warmer” or “you’re getting colder.” This trains the program to get warmer as often as possible.
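To make the "warmer/colder" idea concrete, here is a minimal sketch of that kind of feedback loop. It is a toy perceptron-style learner, not any platform's actual system. The `reviewer` function, the example messages, and the spam-detection task are all assumptions invented for illustration.

```python
# Toy illustration only: a perceptron-style learner, not any platform's real system.

def predict(weights, words):
    """Guess True when the summed word weights are positive."""
    return sum(weights.get(w, 0.0) for w in words) > 0


def train(examples, reviewer, epochs=5):
    """Adjust word weights whenever the guess disagrees with the reviewer."""
    weights = {}
    for _ in range(epochs):
        for words in examples:
            verdict = reviewer(words)  # the human "warmer/colder" signal
            if predict(weights, words) != verdict:
                step = 1.0 if verdict else -1.0
                for w in words:
                    weights[w] = weights.get(w, 0.0) + step
    return weights


# Hypothetical reviewer: calls a message spam whenever it mentions a prize.
def reviewer(words):
    return "prize" in words


# Made-up training messages, already split into words.
examples = [
    ("win", "a", "prize"),
    ("lunch", "at", "noon"),
    ("claim", "your", "prize"),
    ("see", "you", "at", "lunch"),
]

weights = train(examples, reviewer)
print(predict(weights, ("free", "prize")))
print(predict(weights, ("lunch", "tomorrow")))
```

The program never learns what a prize is. It only learns which words tend to earn a "yes" from whoever is sitting in the reviewer's chair.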

The potential weak spot here is the person in the loop. Imagine you’re Twitter and you have a machine learning algorithm you’re training to identify Nazis on Twitter. You put a person in the loop who thinks Trump is a Nazi and anyone who says anything favorable about Trump is a Nazi sympathizer. (They exist: I lost a couple of friends when they called me a Nazi sympathizer for just that reason.)

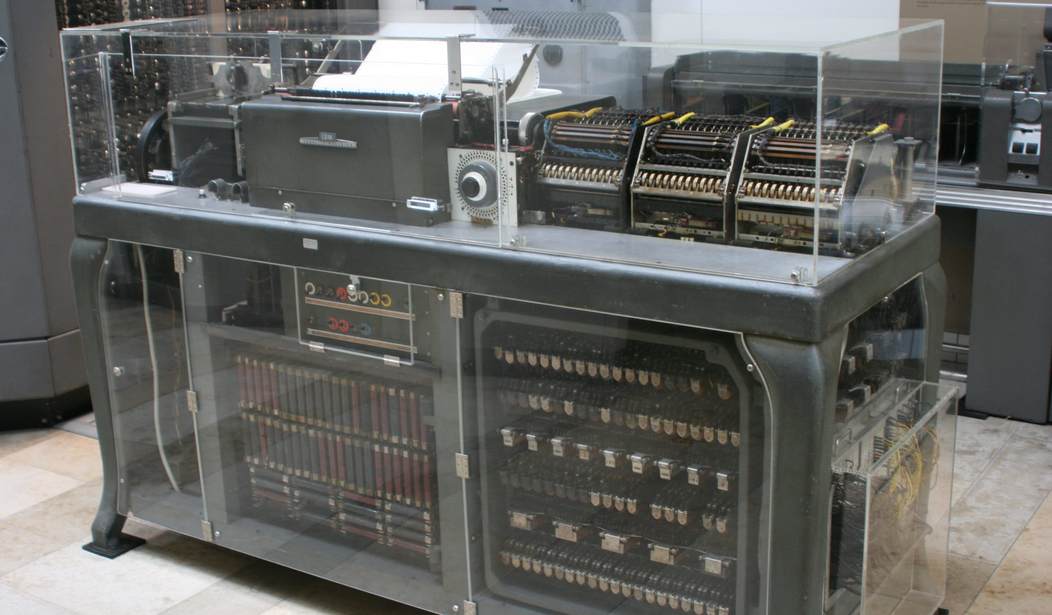

The algorithm spots someone liking the tax cuts: the person says, “he’s a Nazi.” The algorithm soberly notes that. It doesn’t know any better; it has no more understanding than an old-fashioned tabulating machine had of why it put the A and B cards into different bins.

Endorsed Brett Kavanaugh? “He’s a Nazi.” In Congress with an (R) after your name? “Oh yeah, definite Nazi.”

Pretty quickly the algorithm will confidently identify any Republican, any Trump fan, or any independent who says #MAGA, as a Nazi.

So now we come to Jack Dorsey’s (@jack) recent media blitz, in which he said “yes Twitter leans left” but that there was no bias in their algorithms.

I completely believe @jack in both statements, and I’m sure that if I had all the code to examine, there would be no code that looks at “#MAGA” and computes “Nazi”. The training — and the various weighting functions derived from that training — will mean the algorithm will deliver biased results nonetheless.
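A minimal sketch of how that can happen, assuming a deliberately crude scoring scheme that I am making up for illustration (real systems are far more elaborate). The labeled posts below stand in for one hypothetical reviewer's judgments. Notice that neither function mentions any hashtag or topic.

```python
from collections import Counter

# Toy illustration only. The posts and the reviewer's verdicts are invented.


def learn_token_rates(labeled_posts):
    """For each token, the share of posts containing it that were flagged."""
    seen, flagged = Counter(), Counter()
    for tokens, was_flagged in labeled_posts:
        for token in set(tokens):
            seen[token] += 1
            if was_flagged:
                flagged[token] += 1
    return {token: flagged[token] / seen[token] for token in seen}


def score(rates, tokens):
    """Average the learned rates of the tokens we have seen before."""
    known = [rates[t] for t in tokens if t in rates]
    return sum(known) / len(known) if known else 0.0


# (tokens, verdict from a hypothetical reviewer with strong views)
labeled_posts = [
    (["love", "taxcuts"], True),
    (["#MAGA", "rally", "tonight"], True),
    (["great", "game", "tonight"], False),
    (["love", "this", "recipe"], False),
    (["confirm", "kavanaugh"], True),
]

rates = learn_token_rates(labeled_posts)
print(rates["#MAGA"])
print(score(rates, ["#MAGA", "forever"]))
print(score(rates, ["great", "recipe"]))
```

Audit the two functions and you will find nothing political in them. The slant lives entirely in the learned numbers, which came from the labels.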

Of course, there will be a lot of false positives as everyone from Benjamin Netanyahu to John McCain to Alex Jones is marked “Nazi”.

Now, this is oversimplified: the questions are a lot more complicated, and there is more than just one social justice zealot training the algorithms. But the results are still predictable: many people will be identified as violating “community standards” when the actual offense is having an opinion other than what’s considered acceptable by a minimum wage intern in Menlo Park.

That the bias exists within Twitter is not news. There are the Project Veritas videos, or look at this Reddit post:

There has been a lot of controversy with social networks censoring conservative views. I work at a major social network headquartered in the San Francisco Bay Area and it is absolutely true.

We have an algorithm that suppresses content that it deems bad. It is supposed to suppress abusive and spam messages. It ends up not working like that.

To train the model we have humans evaluate content to determine if it is abusive. The evaluators are given no clear instructions on what to consider abusive. The instructions say something to the effect of “Abusive content is hard to define. For these purposes, mark something as abusive if you think it doesn’t belong on the platform”. The human evaluators are a small set of employees who work in our Bay Area office. See #4 to understand the typical types of views of the people at the company.

To the companies credit they spent some amount of time to determine if it is biased. Determining if an algorithm is biased is really hard. It turns out there are many mutually contradictory definitions of AI fairness. The researchers decided on something like cross-group calibration. The idea is that when a liberal and conservative receive the same prediction on the probability of them being an abuser, when you look at the actual data, they should be an abuser at approximately the same rate.

The outcome of this study showed there was significant bias. If you are a liberal and are predicted to have a high probability of being an abuser, the actual data showed that your chance of being an abuser matched the predicted probability. If you are a conservative and are predicted to have a high probability of being an abuser, the actual data showed your chance of being an abuser was much lower than the predicted probability.

In summary the algorithm is biased to incorrectly give conservatives a much higher abuse score than their actual behavior justifies. However, these findings got distorted using some statistical slight of hand so that when they were summarized in a FAQ, it said the opposite and that it wasn’t biased. [Typos left in from source.]

This is some anonymous Reddit poster, not what I would call a strong source on its own, but there are an awful lot of these sources now, and it also makes sense technically.
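For the technically curious, the calibration check the poster describes can be sketched roughly as follows. This is my reading of "cross-group calibration," not the company's actual study. The groups "A" and "B", the 0.5 cutoff, and every number in the data are invented for illustration.

```python
from collections import defaultdict

# Toy illustration only. All records below are made up.


def calibration_by_group(records, threshold=0.5):
    """Among users scored at or above the threshold, compare the average
    predicted probability with the observed abuse rate, per group."""
    totals = defaultdict(lambda: [0.0, 0, 0])  # [sum of scores, abusers, count]
    for group, predicted, abusive in records:
        if predicted >= threshold:
            entry = totals[group]
            entry[0] += predicted
            entry[1] += int(abusive)
            entry[2] += 1
    return {
        group: (score_sum / count, abusers / count)
        for group, (score_sum, abusers, count) in totals.items()
    }


# (group, predicted probability of abuse, was the user actually abusive?)
records = [
    ("A", 0.75, True), ("A", 0.75, True), ("A", 0.75, True), ("A", 0.75, False),
    ("B", 0.75, True), ("B", 0.75, False), ("B", 0.75, False), ("B", 0.75, False),
]

for group, (predicted, observed) in calibration_by_group(records).items():
    print(group, "predicted:", predicted, "observed:", observed)
```

In this made-up data, group A's observed rate matches its predicted score while group B's falls well below it, which is the pattern the poster says the study found for conservatives.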

If it were true, what would we expect? We’d expect to see a lot of people restricted on the right and fewer on the left. We’d also expect to see people with big platforms, or who can get publicity, having their restrictions reversed. (See “Diamond and Silk” and PragerU.)

Which, after all, is exactly what has been happening. Facebook at least apologizes and says it was a mistake. Twitter often just removes the restriction with little or no explanation.

What’s the solution? Government regulation isn’t the answer – the government censors would just give @jack someone else to blame. And even if Trump were nominating the board now, do you want to trust President Warren’s nominees after 2024?

Antitrust? Ask someone like Glenn Reynolds — antitrust law is way outside my area of expertise. My personal bias is that antitrust regulation isn’t much preferable to direct regulation. On the other hand, it’s hard not to see collusion, even conspiracy, in the coordinated attacks on Alex Jones.

What is sure is that when you hear Facebook and @Jack proclaim that they may be biased but their algorithms are not, they are — consciously or unconsciously — not telling the whole story.
