This past week Google held its developer conference where it talked about its future products. Google, along with Facebook and Apple, is making huge investments into artificial intelligence and augmented reality. Google Lens is its first implementation of these technologies.

Google CEO Sundar Pichai provided some details about Google Lens and claimed it is a major inflection point for the company. “All of Google was built because we started understanding text and web pages,” Pichai explained. “So, the fact that computers can understand images and videos has profound implications for our core mission.”

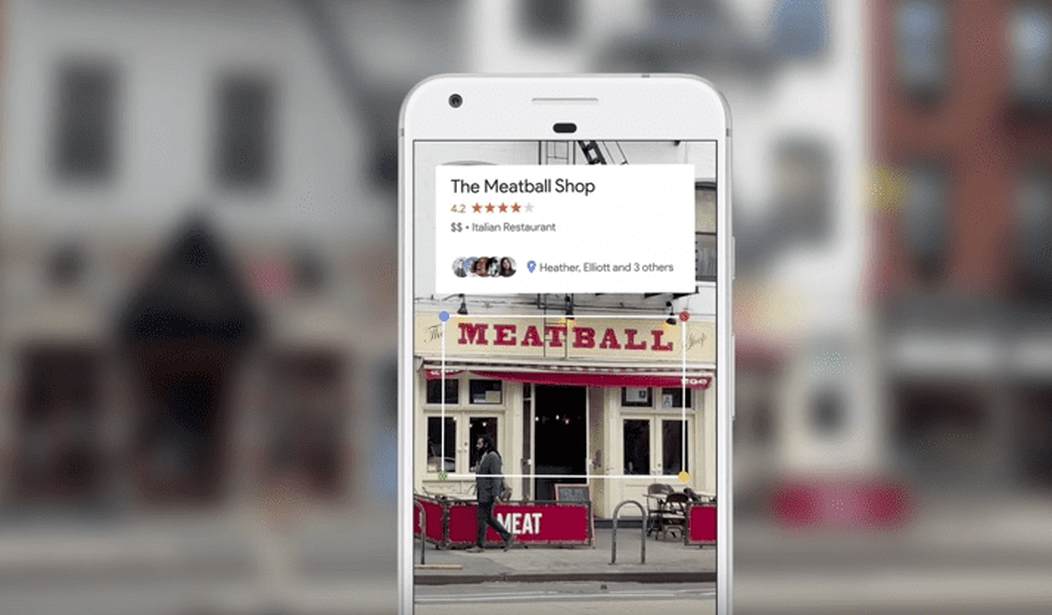

Google Lens will bring a level of intelligence to the phone that combines images with artificial intelligence and augmented reality, blending the real with the virtual.

In some of the examples he cited, you point your phone’s camera at a flower and its name, along with information about the flower, is shown on the screen. Point it at a restaurant or another store and reviews and information will be shown above the store’s image. You’ll also be able to use Google Lens as a means of search. Point the camera at an object and it will tell you where you can buy it or show other information about what it recognizes.

Google Lens will also be added to Google Photos and allow you to search them by what’s in the images, or modify the photos themselves. Pichai demonstrated how your images can be cleaned up using artificial intelligence. He used the example of taking a picture through a chain link fence and removing the fence from the picture automatically.

These areas of technology are the focus of Apple and Facebook as well, and many expect us to be fully engaged with apps using them before the end of the year. Facebook is pouring billions of dollars into developing virtual and augmented reality software. They envision that we’ll be able to just wear a special pair of glasses, and the information will be presented to us without the need for a phone. Apple CEO Tim Cook has praised the benefits of augmented reality and has hinted that the new iPhones being introduced this fall will make heavy use of it.

We can see some examples today of the potential. Vivino, a free app used by 23 million people, lets you take a picture of a wine list and see the ratings for each wine. It’s a multi-step process now: take the picture, enter it, wait for the processing to occur, and then view an image of the wine list with the ratings. With augmented reality, it will be simpler: just point the camera at the list and see the information displayed alongside it. Once the basics are enabled in phones and other devices, a huge community of developers will create exciting new applications.

The conference brought other news as well. Google Assistant is now being used on 100 million devices. It is a voice-enabled service, much like Apple’s Siri, but many think it works better, ironically because Apple collects less personal information and so has less of your information than Google to draw on when answering your queries.

Google also announced that Google Assistant is now available on the iPhone as a downloadable app and the company is actively encouraging other manufacturers to add Google Assistant to their products. One example provided is a drink mixer that enables you to ask Assistant for the recipe of the drink you’re making.

While many of these new features are exciting and useful, they’ll inevitably add complications to the usability of the phone for some. While we’ll get new benefits, the challenge will be to use them without becoming frustrated. Perhaps that’s why the sales of flip phones are increasing.
