If you’re a big-name advertiser, it stands to reason that you don’t want to taint your brand by having it associated with racists or other vile people who populate so much of the internet. For Google, the largest internet advertising provider, this has been a serious problem. Now the company is trying to address it in order to put advertisers at ease.

Over the years, Google trained computer systems to keep copyrighted content and pornography off its YouTube service. But after seeing ads from Coca-Cola, Procter & Gamble and Wal-Mart appear next to racist, anti-Semitic or terrorist videos, its engineers realized their computer models had a blind spot: They did not understand context.

Now teaching computers to understand what humans can readily grasp may be the key to calming fears among big-spending advertisers that their ads have been appearing alongside videos from extremist groups and other offensive messages.

Google engineers, product managers and policy wonks are trying to train computers to grasp the nuances of what makes certain videos objectionable. Advertisers may tolerate use of a racial epithet in a hip-hop video, for example, but may be horrified to see it used in a video from a racist skinhead group.

That ads bought by well-known companies can occasionally appear next to offensive videos has long been considered a nuisance to YouTube’s business. But the issue has gained urgency in recent weeks, as The Times of London and other outlets have written about brands that inadvertently fund extremists through automated advertising — a byproduct of a system in which YouTube shares a portion of ad sales with the creators of the content those ads appear against.

It’s a justified concern for those brands. Unfortunately, it raises concerns in a different direction.

The “engineers, product managers, and policy wonks” described are people, and that introduces a potential problem. What are these people’s biases, and will they let those biases influence the training the advertising bots receive?

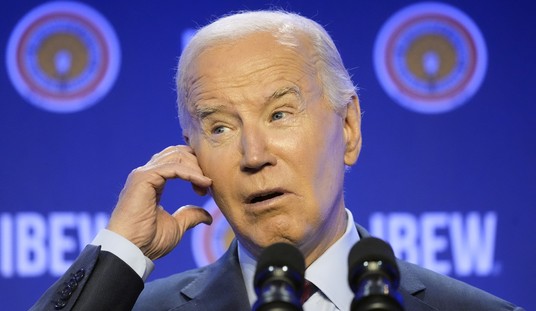

For example, a large portion of the criticism of President Obama was labeled as racist. How will that come into play? Will these computers be able to tell the difference between a video of a commentator who takes issue with affirmative action and a video of a Klan member railing against interracial marriage?

It’s not an unreasonable concern. After all, Twitter began something called “shadowbanning,” in which some users found that their tweets were not being distributed like other people’s. To imagine Google employees letting their own personal biases influence what they think of as “context” isn’t out of the realm of possibility.

Only time will tell whether Google can get it right, but perhaps the tech giant will bear in mind that advertising works only if potential customers can view the ads. If Google builds too much bias into its idea of context, it will open the door for a competitor with less bias, or with more of an ability to control it, to take Google’s place at the top of the tech heap.
