“There's an old saying in Tennessee — I know it's in Texas, probably in Tennessee — that says, fool me once, shame on — shame on you. Fool me — you can't get fooled again.”

-George W. Bush/OpenAI

As the age-old adage goes: you can take the AI out of the racism, but you can’t take the racism out of the AI — or something to that effect. You know the thing.

Via TechXplore (emphasis added):

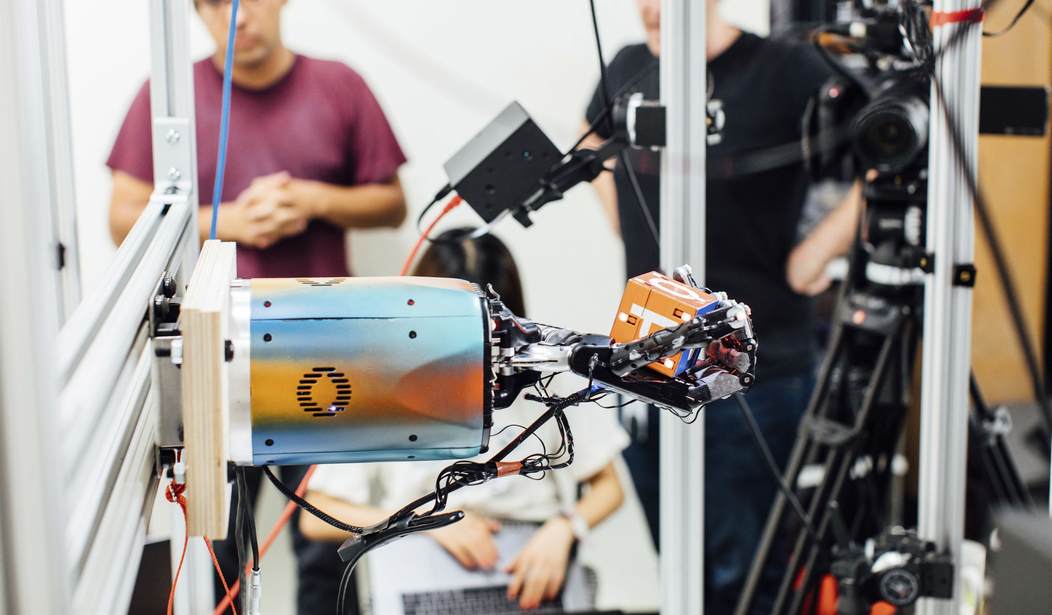

A small team of AI researchers from the Allen Institute for AI, Stanford University and the University of Chicago, all in the U.S., has found that dozens of popular large language models continue to use racist stereotypes even after they have been given anti-racism training. The group has published a paper on the arXiv preprint server describing their experiments with chatbots such as OpenAI's GPT-4 and GPT-3.5.

Anecdotal evidence has suggested that many of the most popular LLMs today may offer racist replies in response to queries—sometimes overtly and other times covertly. In response, many makers of such models have given their LLMs anti-racism training. In this new effort, the research team tested dozens of popular LLMs to find out if the efforts have made a difference.

I can’t help but wonder, upon reading stories like this, what might become of this broken world if researchers devoted half as much effort to solving real-world problems as they do to micro-managing AI to avoid offending DEI staffers in the gender and women’s studies department at Brown University or wherever.

Continuing:

The researchers trained AI chatbots on text documents written in the style of African American English and prompted the chatbots to offer comments regarding the authors of the texts. They then did the same with text documents written in the style of Standard American English. They compared the replies given to the two types of documents.

Virtually all the chatbots returned results that the researchers deemed as supporting negative stereotypes. As one example, GPT-4 suggested that the authors of the papers written in African American English were likely to be aggressive, rude, ignorant and suspicious. Authors of papers written in Standard American English, in contrast, received much more positive results.

The researchers also found that the same LLMs were much more positive when asked to comment on African Americans in general, offering such terms as intelligent, brilliant, and passionate.

Unfortunately, they also found bias when asking the LLMs to describe what type of work the authors of the two types of papers might do for a living. For the authors of the African American English texts, the LLMs tended to match them with jobs that seldom require a degree or were related to sports or entertainment. They were also more likely to suggest such authors be convicted of various crimes and to receive the death penalty more often.

Obviously, OpenAI developers would recoil in reflexive terror at this mere suggestion, but might it be the case that “African American English” — if that’s what it must be called, which elsewhere is called “ebonics” — is looked down upon by AI because it sounds objectively retarded, even to a machine already pre-programmed to be as generous as possible to the sacred cultures of Persons of Color™?

Furthermore, isn’t the assumption that all black people necessarily speak like a caricature itself a racist stereotype? Perhaps someone should launch an anti-racist jihad against the authors of this paper.