AI-generated deepfake videos on so-called “true crime” sites have included clips of young children who were victims of abuse or murder. That raises the question of where society should draw the line.

Just because it can be done doesn’t mean it should be. And in cases where dead children tell, in childish voices, the gruesome stories of their abuse and death, the crawling sensation up one’s spine should be a warning sign: this goes too far.

“Grandma locked me in an oven at 230 degrees when I was just 21 months old,” the cherubic baby with giant blue eyes and a floral headband says in the TikTok video. The baby, who speaks in an adorably childish voice atop the plaintive melody of Dylan Mathew’s “Love Is Gone,” identifies herself as Rody Marie Floyd, a little girl who lived with her mother and grandmother in Mississippi. She recounts that one day, she was hungry and wouldn’t stop crying, prompting her grandmother to put her in the oven, leading to her death. “Please follow me so more people know my true story,” the baby says at the end of the video.

Didn't make it for being a rock star like I hoped, but …

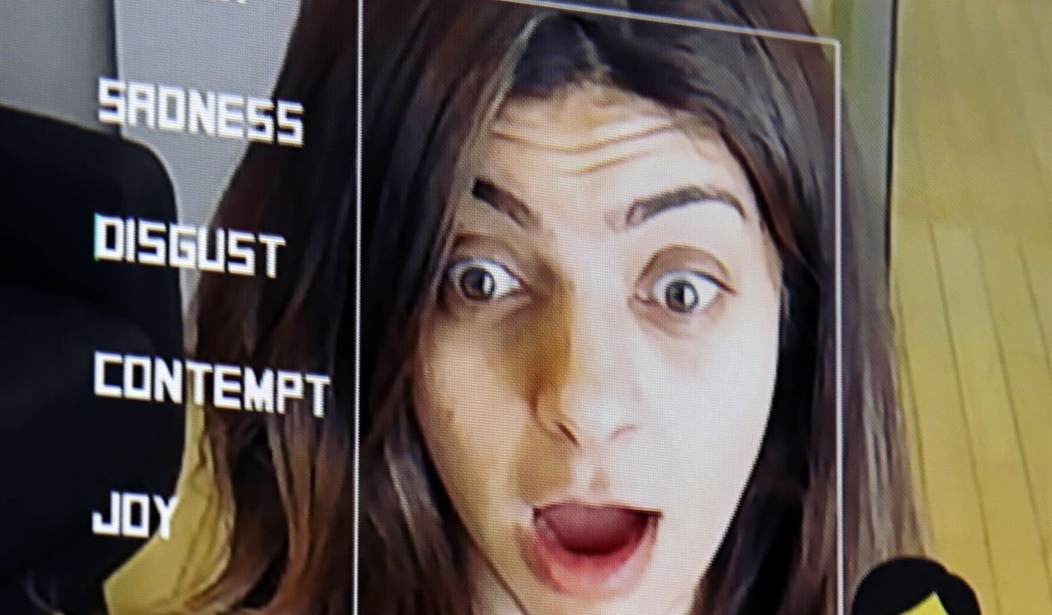

Happy to chat to @RollingStone about a new TikTok trend of deepfake AI-generated videos of victims (often, children) talking in graphic detail about "their own" murders.

Check it out here: https://t.co/qyL3meEU6X

— Dr Paul Bleakley (@DrBleaks) May 30, 2023

The story is hardly “true,” as Vice points out. “Royalty” was her real name, and she was 20 months old when she died, not 21. And unlike the baby in the TikTok video, she was black, not white.

“They’re quite strange and creepy,” says Paul Bleakley, assistant professor of criminal justice at the University of New Haven. “They seem designed to trigger strong emotional reactions, because it’s the surest-fire way to get clicks and likes. It’s uncomfortable to watch, but I think that might be the point.”

The question is, why make such “uncomfortable” videos about real people?

The proliferation of these AI true crime victim videos on TikTok is the latest ethical question to be raised by the immense popularity of the true crime genre in general. Though documentaries like The Jinx and Making a Murderer and podcasts like Crime Junkie and My Favorite Murder have garnered immense cult followings, many critics of the genre have questioned the ethical implications of audiences consuming the real-life stories of horrific assaults and murders as pure entertainment, with the rise of armchair sleuths and true crime obsessives potentially re-traumatizing loved ones of victims.

“Something like this has real potential to re-victimize people who have been victimized before,” says Bleakley. “Imagine being the parent or relative of one of these kids in these AI videos. You go online and in this strange high pitched voice, here’s an AI image [based on] your deceased child, going into very gory detail about what happened to them.”

There’s no federal law banning deepfakes, although both Virginia and California have banned deepfake pornography. And according to Vice, “earlier this month Congressman Joe Morelle proposed legislation making it both a crime and a civil liability for someone to disseminate such images.”

The problem lies in policing the internet, which, as we all know, is a double-edged sword. Companies that host such websites need to exercise more discretion in giving them access to bandwidth. And there needs to be some agreement, at some level, that deepfakes can cause emotional harm to others.

Like most things that are worthwhile, it will be very difficult. But that’s no reason not to try.