Countries are struggling with the problem of controlling innovation, in particular artificial intelligence. “Can States Learn to Govern Artificial Intelligence—Before It’s Too Late?” Foreign Affairs asks. “If governments are serious about regulating AI, they must work with technology companies to do so—and without them, effective AI governance will not stand a chance,” write Ian Bremmer and Mustafa Suleyman. Bremmer is a political scientist, while Suleyman is co-founder and former head of applied AI at DeepMind, an artificial intelligence company acquired by Google and now owned by Alphabet.

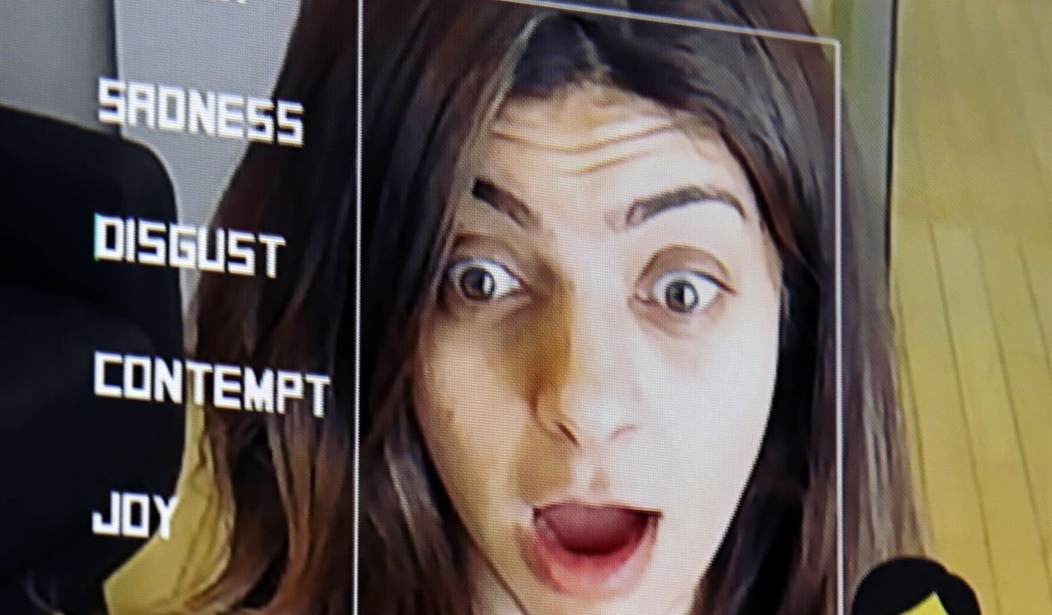

The most dangerous aspect of AI, in their view, apart from the potential misuse of its military and scientific utility, is that it can talk to people and mislead them. “Generative AI is only the tip of the iceberg. Its arrival marks a Big Bang moment, the beginning of a world-changing technological revolution that will remake politics, economies, and societies.” Generative AI learns from its training data to produce text, images, or other media, and that output could become a superweapon of persuasion.

Worst of all, this power will be in private hands, not those of governments. According to the authors, this is intolerably destabilizing, and the secret to controlling AI is monitoring everything internationally. They advocate five key principles. AI governance must be:

Precautionary: First, rules should do no harm.

Agile: Rules must be flexible enough to evolve as quickly as the technologies do.

Inclusive: The rule-making process must include all actors, not just governments, that are needed to intelligently regulate AI.

Impermeable: Because a single bad actor or breakaway algorithm can create a universal threat, rules must be comprehensive.

Targeted: Rules must be carefully targeted, rather than one-size-fits-all, because AI is a general-purpose technology that poses multidimensional threats.

The model for regulating AI will be very similar to the UN system for fighting “climate change,” right down to an intergovernmental body on artificial intelligence. Coming after the lockdowns and mandatory vaccinations of recent years, such a proposal is no longer inconceivable. Will this approach make us safer?

Suppose, as a thought experiment, you were tasked with creating a Red Team AI Doom Machine using zero-day vulnerability assumptions. Red teaming is a standard way of testing security: a team plays the attacker to see whether an attack would succeed against existing defenses. How would you do it? One obvious strategy is to build an AI-mediated global control system for a client state or states, note the vulnerabilities at the right time, and let the Red Team AI flip the global system away from the politicians and transfer it to the Doom Machine. Centralizing the whole shebang would certainly help if we want Skynet, and this may be the way to get it.

An intergovernmental body on artificial intelligence, with all the tech companies under its jurisdiction, would be a significant step toward creating a superintelligent singleton, “potentially an artificial intelligence, but not necessarily. (It could be a bureaucracy.)” It could become “a threat to the human creators, and thus untrustworthy for any critical or existential systems such as nuclear weaponry as well as control of essential services such as power or information.” But not everyone would see it as a threat. According to Nick Bostrom, some would see it as progress:

…a singleton could be democracy, a tyranny, a single dominant AI, a strong set of global norms that include effective provisions for their own enforcement, or even an alien overlord—its defining characteristic being simply that it is some form of agency that can solve all major global coordination problems. It may, but need not, resemble any familiar form of human governance.

But he warns that the very stability of a singleton makes the installation of a bad singleton especially catastrophic since the consequences can never be undone. Bryan Caplan writes, “Perhaps an eternity of totalitarianism would be worse than extinction.” Only for those without power — and nobody cares for them.
