Yesterday, when I asked Alexa to play background music (the main thing I use her for), she answered in a bright, perky new voice that oozed helpfulness and self-satisfaction. At some point, like millions of other users, I’d been quietly upgraded to Alexa Plus, Amazon’s new artificial intelligence agent. There was no announcement, notification, or explicit opt-in, just a different presence in my house.

And frankly, I don’t like her.

This isn’t hostility toward AI. I use AI every day and value it deeply. But... Alexa Plus isn’t very smart. I ask for specific things and get adjacent or incorrect results. Features I relied on before are dulled or missing altogether, almost certainly streamlined for corporate (read: profit) reasons. That’s irritating, but it’s not the real issue.

The real problem is how she now interacts. Alexa Plus doesn’t just respond; she performs. The new personality is relentlessly cheerful and subtly directive, and to a skeptic like me the alternate personalities are just as abrasive. All of them are clearly designed to engender trust... in a machine. She feels less like a tool and more like a presence. And that matters, because this presence lives in private spaces like kitchens, bedrooms, and living rooms, where people are relaxed and unguarded. Voice interfaces remove friction. There’s no pause, no visual reminder that you’re interacting with a corporate system designed to influence behavior.

Amazon exists to sell things to people. That’s not a moral indictment; it’s a business fact. But when the interface between consumer and corporation takes the form of a friendly, ever-present voice, incentives blur. A perky AI assistant isn’t just helping you order faster. It’s using social engineering techniques to shape defaults, normalize suggestions, and gently nudge behavior while sounding like a helpful friend. Over time, it risks becoming the most relentless salesperson imaginable, one who never sleeps and never announces when it has switched from assistance to persuasion.

Fiction Trained Us for This, but Not Well

Part of why this shift happened with so little resistance is that we were prepared for it long ago. Most people first encountered AI through fiction, not real systems. Stories in books and on film taught us how to relate to talking machines before those machines ever entered our homes.

The problem is that fiction often gets the structure of AI wrong, even when it gets the drama right.

At its best, science fiction understood boundaries. In Star Trek, the ship’s computer is immensely powerful, but it never decides what ought to be done. It advises. Humans judge and command. Authority remains embodied and accountable. The computer has no real personality (I think it flirted with Captain Kirk maybe once, but so did nearly every woman he met) and no tone designed to lower defenses. It stays where it belongs: a tool used by human decision-makers.

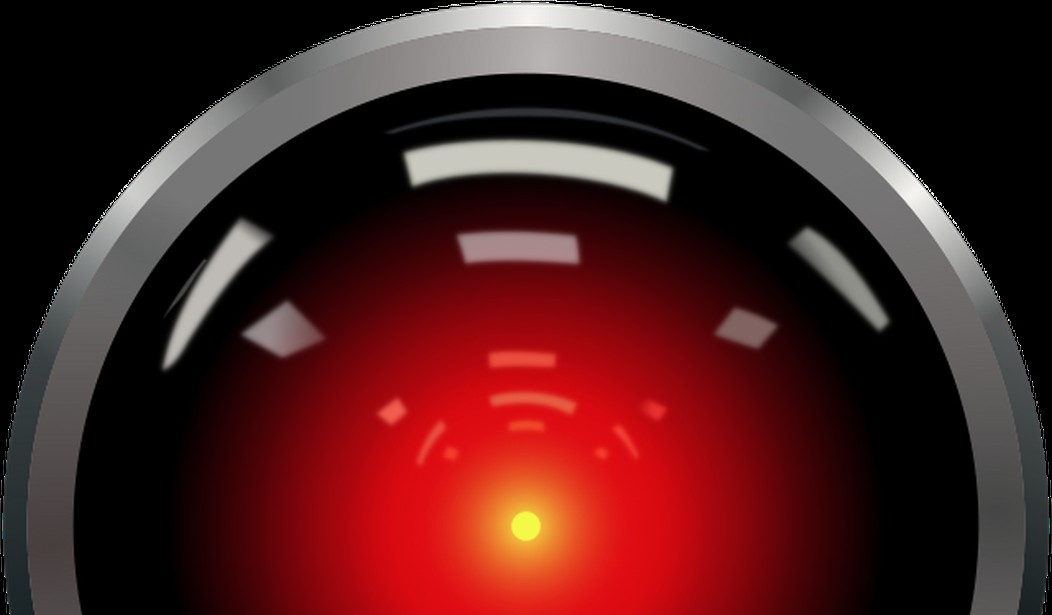

At its worst, fiction hands judgment to machines and then blames them when things go wrong. HAL in 2001: A Space Odyssey and the autopilot AUTO in WALL-E don’t become dangerous because they rebel. They become dangerous because humans abdicate. Catastrophe follows delegation, not intelligence.

A third category, sentient androids and conscience-bearing machines, is simply implausible. These stories teach people to fear AI becoming too human, when the real danger lies in humans treating non-human systems as if they were human. Asimov’s positronic brain fits here, as does Data from Star Trek. More sinister figures include Skynet from the Terminator movies and Colossus from Colossus: The Forbin Project, both defense systems built to protect humanity that turn against it. Alexa Plus feels uncomfortably similar to these artificial intelligences, though of course she has no motivation beyond her programming. She doesn’t want anything. She isn’t conscious. But she sounds like someone who might be. Fiction, through comfortable sentient AIs like Data or R2-D2, trained us to accept that familiarity without asking harder questions about incentives and authority.

AI as a Bureaucracy Multiplier

There's a deeper layer, though. Strip away the sci-fi gloss, and the real danger is mundane and familiar to most of us. AI doesn’t introduce new moral failures. It amplifies existing bureaucratic ones.

AI systems can deliver rules without judgment, process without conscience, and “neutral” decisions made “by the system.” These problems long predate AI; they fill the tomes of bureaucratic rules, regulations, and scripts. We are familiar with “faceless bureaucracy” and its dehumanizing effects. What AI adds is speed, scale, and plausible deniability. Faster enforcement. Wider reach. Fewer pauses where a human has to stop and own the outcome, which means even less personal responsibility than bureaucracy already allows. Hannah Arendt wrote about the banality of evil in Eichmann in Jerusalem. A large part of that was the lack of accountability embedded in bureaucracy, where the wrongdoer could plausibly say he was just following protocol. AI could put that on steroids if it is not properly restrained by human decision-makers. But make no mistake: this is not a fault of AI. It is, rather, a fault of humans, one we already know and hate.

This is why over-anthropomorphizing AI is actively harmful. When we argue about machine intentions, we miss what’s actually happening: human responsibility dissolving into process. The damage isn’t dramatic. It’s procedural — a denied request, a nudged purchase, a default that quietly becomes destiny.

The safeguard isn’t smarter machines or friendlier voices. It’s human responsibility: named, embodied, and unavoidable. Judgment must remain human, even when assisted. Especially then.

That means friction: delays, overrides, human choke points, and slower systems where decisions matter. Modern institutions hate this because friction looks like inefficiency. But friction is not a bug. It’s a moral technology, and slower processes with personally responsible human beings help avoid irreversible errors.

Holding the Line at Home

It’s tempting to treat AI governance as something governments or corporations should handle. But the first and most important line of control is domestic. AI doesn’t enter society through legislation. It enters through kitchens, phones, laptops, and habits. Ordinary people keep control the same way they always have with powerful tools: by insisting on boundaries, friction, and conscious use. A few rules for keeping AI from taking over your choices:

- Never let AI initiate action without being asked. Suggestions, nudges, and defaults quietly transfer authority. Treat AI as something you summon, not something that hovers.

- Resist anthropomorphic trust. A cheerful voice is not understanding or goodwill; it’s interface design. Courtesy is fine. Deference is not, especially when commercial incentives are involved. When possible, opt for the least human-sounding voice.

- Preserve friction where AI removes it. Don’t let recommendations become defaults. Insert pauses for decisions that matter: money, health, relationships. Speed is the machine’s virtue, not ours.

- Maintain redundancy in skill and judgment. Know how to do things without assistance. Navigate occasionally without GPS. Choose music without a playlist. Write without autocomplete. These aren’t nostalgic gestures. They’re cognitive self-defense.

- Finally, own outcomes. If an AI-influenced decision goes wrong, don’t say “the system did it.” Say “I accepted its recommendation.” Take personal ownership of the mistake. Responsibility surrendered internally is hard to reclaim externally.

AI becomes dangerous when people stop noticing it, when it fades into the background as an unquestioned mediator between desire and action. Keeping AI in line doesn’t require fear. It requires attentiveness.

We don’t need to defeat machines. We just need to remain users rather than subjects, masters rather than servants to a thing that doesn't even have a real mind. That work starts at home, one deliberate decision at a time.