I was watching a couple of YouTube videos by Steve Lehto, who does a lot of idiosyncratic stuff commenting on law news. In this video, Steve talks about a lawyer who has gotten himself in trouble for having ChatGPT write a brief in a liability lawsuit for him, including citations, and got caught because the citations were fictitious.

In his defense, he said he did check the citations by asking ChatGPT if those citations were real, and it assured him they were.

In a recent New York Post story, a professor at Texas A&M failed several classes—everyone in the class—for using ChatGPT to write an assignment. How did he know? Why, he copied the assignments into ChatGPT and asked, “Did you write this?” ChatGPT answered it had, so everyone got a 0 on the assignment.

Not how this works

As the estimable lady in the GIF says, that’s not how this works. That’s not how any of this works.

In fact, there’s a problem even talking about this stuff because we use verbs like “says,” “writes,” or even “creates,” and they all have that connotation that there is someone inside saying or writing things. The underlying problem is that people think there’s really someone in there. A real, sentient entity. And there’s just not.

A variant of this fallacy is when people talk about how the AI “hallucinates” things if it comes out with something it “made up.” I suspect that’s already become a term of art in AI, as much as that particular locution deserves to be dropped in the killing bottle. (I’m darkly suspicious that the AI community isn’t resisting because, consciously or unconsciously, they really want people to believe there is someone in there.) But to understand that, we have to understand what the AI is really doing.

The start: spelling checkers

To explain what the AI is doing, we can start back in pre-history with the original UNIX Version 7 spell(1)

This worked very simply: it read each file and went word-by-word, checking against a dictionary. If the word wasn’t there, it wrote it out in a list of misspelled words, leaving the user to find and correct the misspelled words. That was simple enough — the hardest part was probably getting someone to type the original word list — and it really was helpful, even in those days of stone knives and bearskins.

But it had one weakness — it was easy to find and change places where you wrote “teh” or “recieve,” but not so much for a word like “floccinaucinihilipilification.” (And yes, that is a real word, even if it is worthless.)

Suggested replacements

So the next step was to make a program that could not only identify misspelled words but could suggest replacements. That requires more computer power, and also more math.

There are two parts to this, built around a concept of “edit distance,” which is a count of the primitive edit operations — single-letter insertions, deletions, or substitutions — needed to go from one word to another. The original idea came from a Soviet mathematician, Vladimir Levenshtein, in 1965. (Levenshtein distance turns out to show up in more important areas, like bio-informatics and genetics, where it applies to gene sequencing.) The basic idea is that if you type “Levinstein,” it can easily compute that the distance from “Levinstein” to “Levenshtein” is short, but the distance to “zebra” is long, so it suggests “Levenshtein.”

This is still done on a word-by-word basis, though. The next step was to suggest spellings as you type, the basis of the infamous autocorrect.

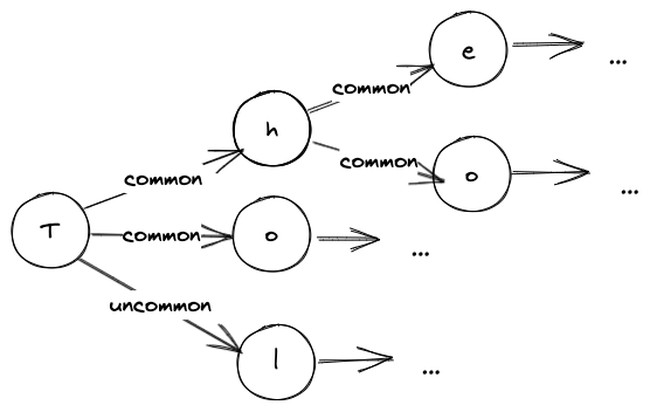

I don’t have access to the code that Apple uses for autocorrect, but it’s easy enough to reverse-engineer the approach they use. To make it work, our program has to go letter-by-letter instead of word-by-word. In English — just as an example, this approach will work even for languages like Japanese and Chinese — we can take a big collection of correctly-spelled text and compute the probability of any letter following a particular letter. So, for example, “T” is often followed by “h,” very rarely by “l” since the Aztec god Tlaloc doesn’t come up very often in conversation. So the statistics of English are transformed into a model in which the possible next letters are laid out by probability.

(By the way, this is known as a “Markov model.” This whole topic is full of Russian mathematicians.)

Language models

All of these approaches, even the simple 1970s-style spell checker, depend on some body of knowledge about English from which they work. (Even spell(1) depends on a dictionary.) In general, these collections of knowledge are called language models.

Modern versions of autocorrect have a deeper language model. Instead of a Markov model of the frequency of individual letters, they model the frequency of full words in succession. So, let’s say you type just the letter “T.” Just as we say above, the next letter in English following a “T” is commonly either “H” or “O,” and very uncommonly it’s an “L.” (These are in ALL-CAPS so it’s easier to see. The models work just fine with normal case.)

After “TH,” the most common next letter is “E” because “THE” is the most common word in English. So after typing “TH,” the Markov model suggests “E” is the next letter and, using probability, generates a suggestion of “E” to complete the word “THE.”

The model probably has some other extensions; for example, a really common typo is “TEH” for “THE,” so there’s probably an additional branch that represents that particular typo and corrects it.

Large language models

We can abstract this mathematically using conditional probability, which basically says, “Given these things that are already known, what is the probability of this coming next?”

In good old spell(1), that’s very simple: “Given the existing dictionary, what is the probability that this word is spelled correctly?” In this case, it’s basically answering a probability of 1 or 0: it’s either in the dictionary, so it’s spelled correctly, otherwise not.

If the program is able to make suggestions, then it completes the most probable replacements by looking for words that are “close” in terms of Levenshtein distance and suggesting the most probable replacements. Add in predictive autocorrect — “you typed TEH, which is not a word, but THE is, so correct the TE to TH.” But all of these basically have a model of what correct English is like, and make predictions based on that model.

ChatGPT and its kin — there are probably a hundred tools using this same approach, but I’m just going to say “ChatGPT” as a catch-all for all of them — use an immense amount of text to train their models, but the overall model idea is the same: Given what we know so far, what are the most probable next chunks of text?

It’s a large language model because spelling checkers are using a language model of 50-100 thousand words. ChatGPT-4, the newest model, was trained on 500-600 gigabytes of text using a specialized supercomputer built by Microsoft Azure. That’s something like 10 million times as much input.

No human could do all the tabulation to build a language model for that much text. Instead, GPT models are built using machine learning based on neural networks. I plan in a later article to explain in some detail how a neural network works, but I promised the editors not to put much math into this article. But, basically, what the neural network is doing is using the training input, and a truly gigantic but essentially simple polynomial with many many terms — it’s been reported that GPT-4 has 100 trillion parameters — and uses that input to basically fiddle with those parameters until the whole collection can predict plausible output text reasonably reliably.

This is pretty abstract, so let’s look at a real example. Say I ask ChatGPT for ten suggested endings for the prompt “The quick brown fox.” I get back:

- The quick brown fox jumps over the lazy dog.

- The quick brown fox ran through the forest.

- The quick brown fox caught its prey and feasted.

- The quick brown fox disappeared into the night.

- The quick brown fox found a cozy den to rest in.

- The quick brown fox howled at the moon.

- The quick brown fox left footprints in the snow.

- The quick brown fox outsmarted the hunter.

- The quick brown fox explored the countryside.

- The quick brown fox lived happily ever after.

The most probable ending is “jumped over the lazy dog,” and, in fact, before I asked for ten endings, that was what it offered. Asked for ten endings it has to dig a little deeper. It does so by referring back to the context, something called “attention.”

What it is not doing

What it is not doing is understanding the conversation. Do you remember the old game Mad Libs? The basic idea of Mad Libs is to take a piece of prose and replace some words with blanks labeled with the part of speech of the word. What ChatGPT and similar tools do is like Mad Libs on steroids: taking your prompt as a start, it generates more words based on what the most likely next words are.

In fact, ChatGPT can generate Mad Libs. Here’s part of one I just generated:

Write me a madlib about politics

Politics can be a (adjective) topic, especially during election season. Politicians often (verb) about the issues that matter most to them, hoping to win over the hearts and minds of voters.

However, politics can also be (adjective) at times, with candidates making (adjective) promises that they may not be able to keep. It’s important to do our own (noun) and research the issues before casting our votes.

Here’s how it filled this in:

Using the previous response, fill in the blanks with the funniest responses

Sure, here’s a filled-in madlib with some funny responses:

Politics can be a wacky topic, especially during election season. Politicians often dance about the issues that matter most to them, hoping to win over the hearts and minds of voters.

However, politics can also be bonkers at times, with candidates making ridiculous promises that they may not be able to keep. It’s important to do our own research on the best meme to decide who to vote for.

Why go through all this?

There has been a lot of excitement and speculation about ChatGPT, AI, and the perils of AI in general. Much of it is actually about the perils of AGI, artificial general intelligence. This is hard to talk about because, honestly, we’re not sure what exactly natural general intelligence is. (Look back at the controversy over Herrnstein and Murray’s The Bell Curve, for example.)

What ChatGPT and similar tools do is give a plausible illusion of intelligence by writing text that its language model says is very probably good text, convincing text. But it is an illusion — it’s just running through its gigantic statistical model, generated by using a gigantic corpus of prose. AI programs go back to Joseph Weizenbaum’s ELIZA in the ’60s. As he described in a paper in 1966, Weizenbaum was surprised to find that people interacting with the program — specifically with the DOCTOR version that used a simple model to simulate a Rogerian therapist — attributed thought and even emotion to the program.

AI chat programs like ChatGPT are conceptually the same: they have a language model and generate texts based on that language model.

These programs are much more convincing than ELIZA was. The hazard isn’t that AI LLMs are going to turn into conscious and malevolent entities like Skynet or Colossus. The hazard is people — like the college professor and the lawyer mentioned at the beginning — being taken in by the illusion.

There are many very promising applications of AI coming out of these models, and I’ll have another article soon talking about what AI is good for. (One example is suggesting titles; that’s how I got the title of this piece.) But they’re tools, like a saw, a screwdriver, or a car. And if something goes wrong, just like any tool, you can’t blame the tool. You have to blame the user.